Conversation Garden

Fall 2020

3 weeks

Computational Design

Interaction Design

Design + Illustration

Mimi Jiao

Charmaine Qiu

Development

Alice Fang

Swan Carpenter

Patricia Yu

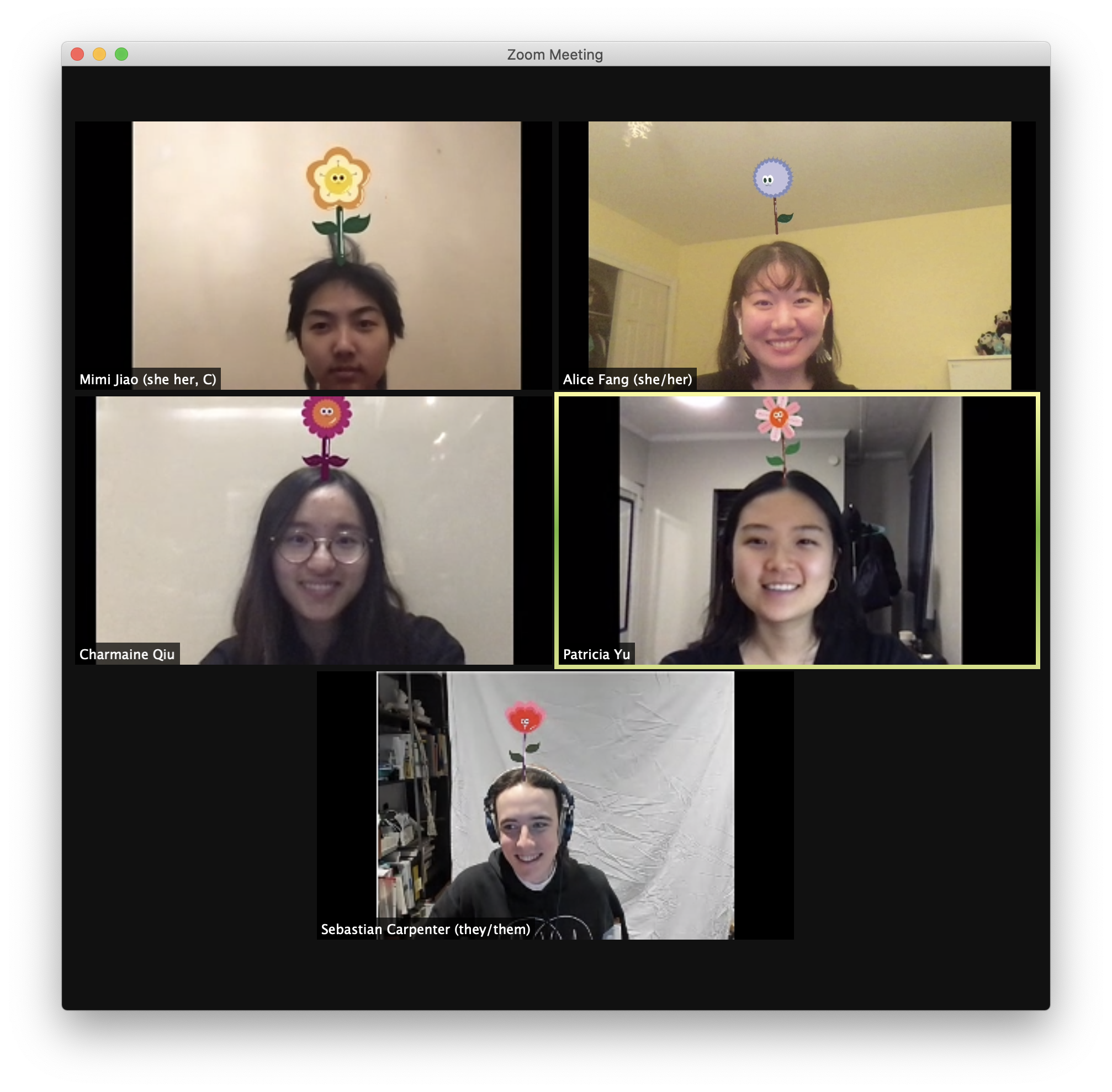

Making conversations more whimsical on Zoom~

These past few months, we’ve been Zooming and FaceTiming so much more than we had pre-COVID. For remote schooling in particular, classroom discussions have fallen flat, as students refuse to turn on their cameras or microphones. How can we incentivize participation and discussion in a remote learning environment?

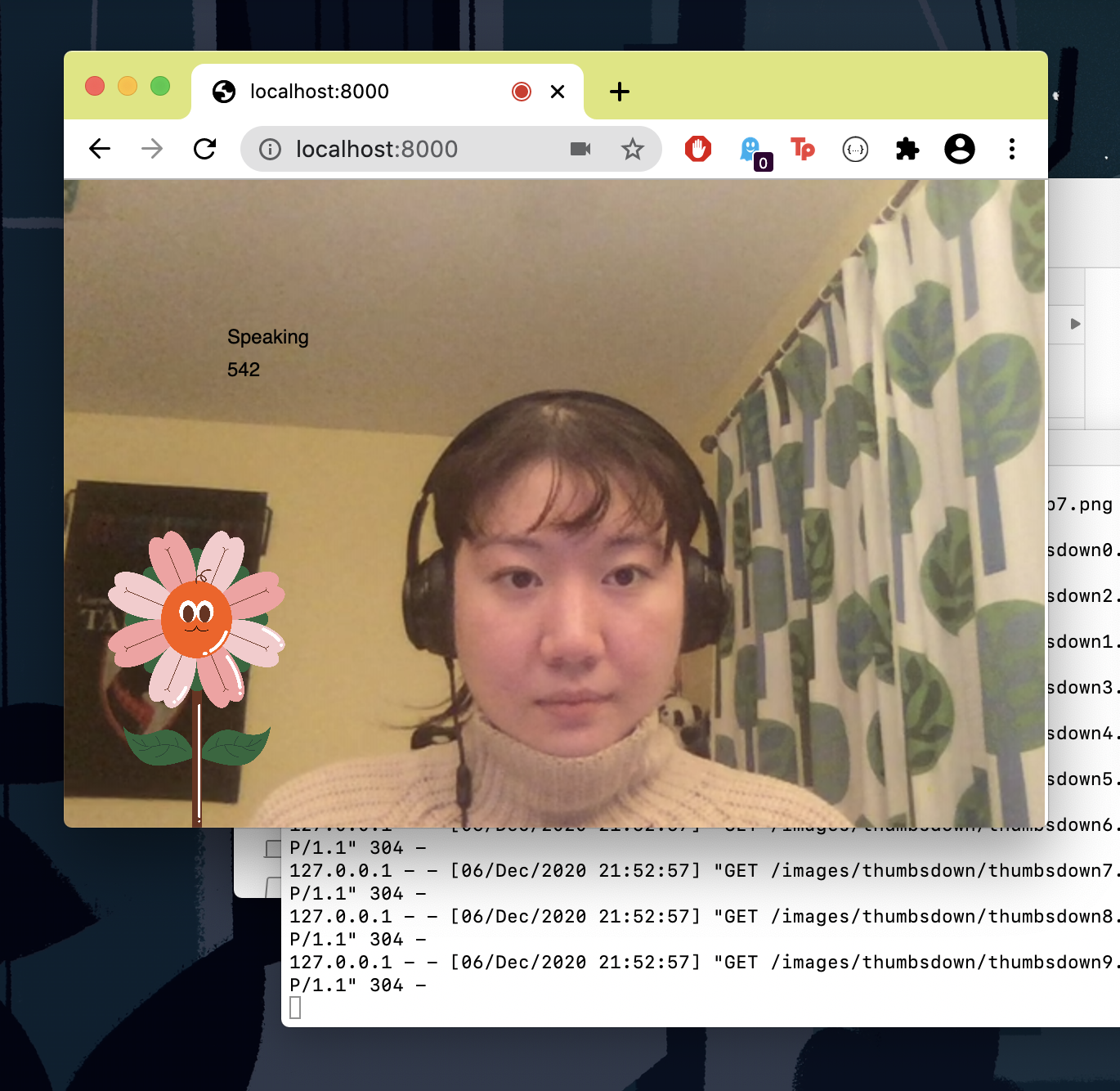

Using Google’s Teachable Machine, p5.js, and Open Broadcaster Software (OBS), we designed and developed moving flower avatars that grow and interact based on your speech and gestures.

The more you speak, the more excited your flower friend becomes, and the taller they grow! If you raise your hand or give a thumbs up, your flower friend will mimic you as well!
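To give a feel for the "speak more, grow taller" idea, here is a minimal sketch of how a flower's height could be updated every frame. It is not our actual sketch code: the function name `updateFlowerHeight` and all of the constants are made up for illustration, and it assumes something else (like a sound model) has already decided whether you are speaking.

```javascript
// Hypothetical constants, chosen only for illustration.
const MIN_HEIGHT = 40;   // starting stem height in pixels (assumed)
const MAX_HEIGHT = 300;  // tallest the stem is allowed to get (assumed)
const GROW_RATE = 2;     // pixels gained per frame while speaking (assumed)

// Returns the flower's next height given its current height and
// whether speech was detected on this frame.
function updateFlowerHeight(height, isSpeaking) {
  // Only grow while the person is speaking; otherwise hold steady.
  const next = isSpeaking ? height + GROW_RATE : height;
  // Clamp so the flower never leaves the [MIN_HEIGHT, MAX_HEIGHT] range.
  return Math.min(MAX_HEIGHT, Math.max(MIN_HEIGHT, next));
}
```

In a p5.js sketch, a function like this would probably be called once per `draw()` loop, with the returned height used to draw the stem.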

Live p5.js sketch

Zoom currently does not support third-party filters (outside of Snapchat Lens), so if you want to create your own flower avatar, you’ll need to install Open Broadcaster Software and use its virtual camera with Zoom. Dan Shiffman’s tutorial is super comprehensive if you want to set it up!

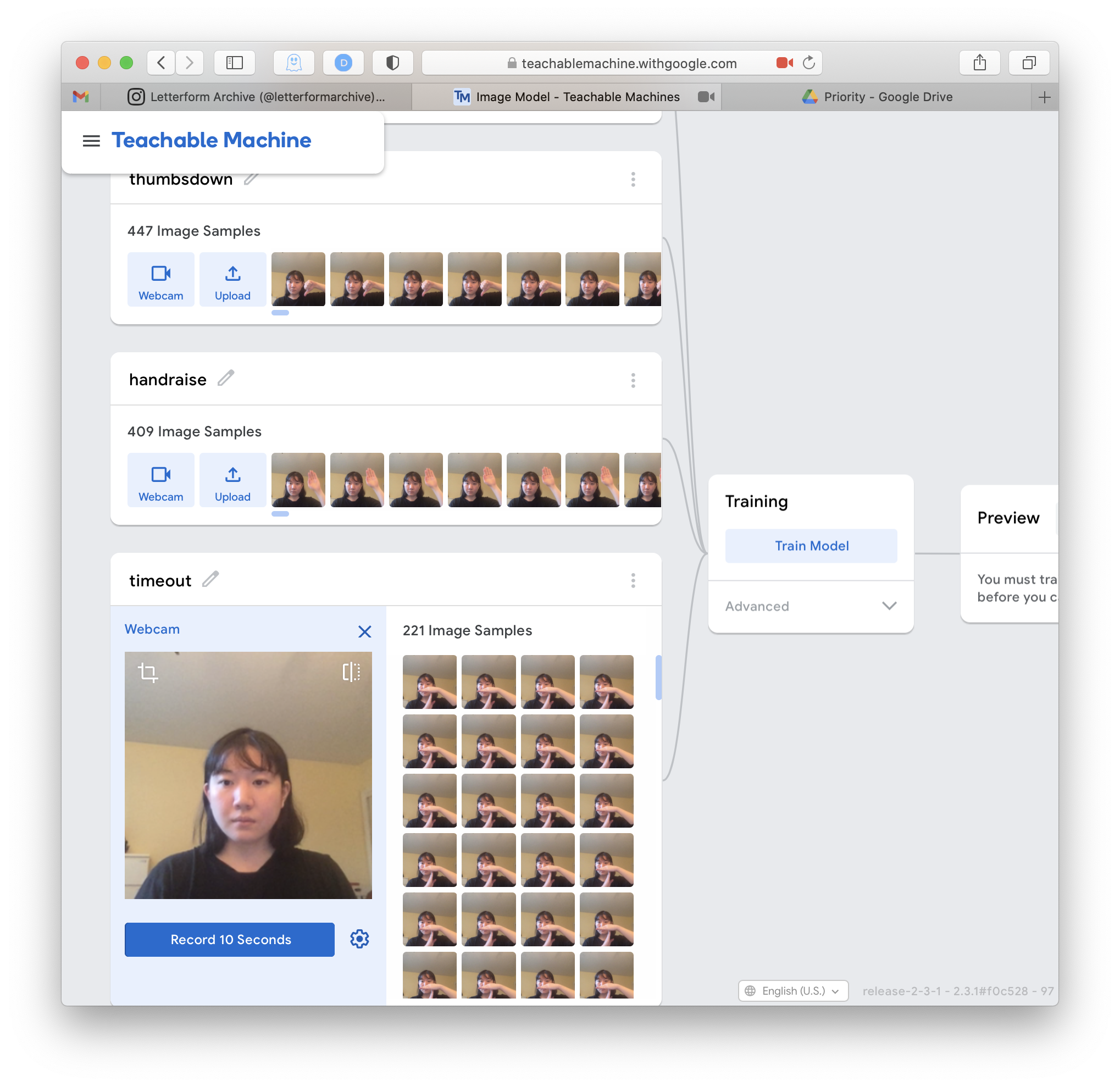

Once you have OBS, you’ll also have to train your own Teachable Machine image model for the following gestures: thumbs up, thumbs down, hand raise, timeout, and neutral. You can then insert your image model URL into the live code to (hopefully) see a flower avatar on your head!
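If you are curious how the five gesture classes might be used once your model is trained, here is a rough sketch. It assumes the classifier hands back a list of `{ label, confidence }` objects, and that you named your Teachable Machine classes exactly as in the `GESTURES` list below. The function name `pickGesture` and the 0.8 threshold are hypothetical and not taken from the live code.

```javascript
// Class names as they would be typed into Teachable Machine (assumed spelling).
const GESTURES = ["thumbs up", "thumbs down", "hand raise", "timeout", "neutral"];

// Picks the most confident known gesture from a list of classifier results.
// Falls back to "neutral" when nothing is confident enough, so the flower
// does not flicker between poses on uncertain frames.
function pickGesture(results, threshold = 0.8) {
  let best = null;
  for (const r of results) {
    // Ignore labels that are not one of the five expected gestures.
    if (!GESTURES.includes(r.label)) continue;
    if (best === null || r.confidence > best.confidence) best = r;
  }
  return best !== null && best.confidence >= threshold ? best.label : "neutral";
}
```

For example, calling `pickGesture` with a confident "thumbs up" result returns `"thumbs up"`, while a frame where every class scores low falls back to `"neutral"`.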

///

In the process of this project, we had to figure out the limitations of Teachable Machine. The sound model, which categorizes speaking vs. non-speaking audio, worked fairly consistently for all five of us. However, we soon realized that for the gestures to work, we would each have to train our own gesture image model.
